Toying with an idea for an ideation platform

The Cheatstorm technique of ideation

Haakon Faste et al. describe a novel method of ideation called Cheatstorm. Cheatstorm takes ideas produced in many earlier brainstorming sessions and randomly combines them to produce new, innovative ideas. Their research suggests that these techniques may be well suited to distributed, collaborative teams.

The paper claims that ideation need not require the generation of new ideas. Instead, new ideas can be formed in a more managed way through Cheatstorming: random ideas are presented as "idea seeds", and participants generate new ideas by combining these seeds. The paper describes ideation as the sharing and interpretation of concepts in unintended and (ideally) unanticipated ways. Thus, when participants have to build a new idea from unrelated idea seeds, they are forced to make a creative connection between the idea seeds.
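
As a rough illustration of the random-combination step, a platform could draw a few seeds at a time from a corpus and present them together as one prompt. This is a minimal sketch, assuming the seeds are already available as a list of strings; the function `draw_seed_groups`, its parameters and the example seeds are hypothetical and not taken from the paper.

```python
import random
from typing import List, Optional, Sequence, Tuple


def draw_seed_groups(
    seeds: Sequence[str],
    n_prompts: int,
    seeds_per_prompt: int = 2,
    rng: Optional[random.Random] = None,
) -> List[Tuple[str, ...]]:
    """Return n_prompts groups of distinct, randomly chosen idea seeds (hypothetical helper)."""
    if seeds_per_prompt > len(seeds):
        raise ValueError("not enough seeds to fill a prompt")
    rng = rng or random.Random()
    # random.sample draws without replacement, so a group never repeats a seed.
    return [tuple(rng.sample(list(seeds), seeds_per_prompt)) for _ in range(n_prompts)]


# Example: three prompts, each pairing two hypothetical seeds.
example_seeds = ["solar charger", "umbrella", "smart mirror", "plant watering app"]
for first, second in draw_seed_groups(example_seeds, n_prompts=3, rng=random.Random(42)):
    print(f"Combine: {first} + {second}")
```

Passing a seeded `random.Random` makes a session reproducible, which could help when comparing how different teams respond to the same prompts.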

Finding a collection of idea seeds

Reddit has thousands of "subreddits", which are independent forums about specific topics. Each submission has a score derived from the upvotes and downvotes it receives, so Reddit can be used to build a corpus of ideas together with their scores. Some subreddits where users post ideas are:

- Lightbulb: Users post their ideas and inventions. (Another subreddit called Ideas was merged into this one.)

- CrazyIdeas: Users post less realistic ideas. This forum claims "There are no wrong ideas!"

- Showerthoughts: Users post thoughts they had during routine or mundane tasks. Although the posts are not specifically ideas, this subreddit has significantly more submissions.

- Others: SomebodyMakeThis, AppIdeas, somebodycodethis and Startup_Ideas.

One problem with this approach is that it yields full sentences, whereas a short phrase is better suited to serve as an idea seed. We will need a method to extract only the key-phrases from these sentences.

Using PRAW to build a corpus

Reddit provides an API for interacting with the website. PRAW, a Python wrapper for this API, simplifies the process by handling tasks such as authentication and network requests. Here is a code snippet that gets the title, content and score of the submissions posted within a window of days:

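    import calendar
    import datetime

    # `subreddit` (a praw Subreddit), `days_ago` and `time_step` are set
    # elsewhere in the script.
    now = datetime.datetime.utcnow()
    earlier = now - datetime.timedelta(days=days_ago)
    later = earlier + datetime.timedelta(days=time_step)
    # Convert the window boundaries to Unix timestamps (seconds, UTC).
    earlier_timestamp = calendar.timegm(earlier.utctimetuple())
    later_timestamp = calendar.timegm(later.utctimetuple())
    # Subreddit.submissions(start=..., end=...) exists in PRAW 5.x; PRAW 6
    # removed it when Reddit retired the search endpoint it relied on.
    submissions = subreddit.submissions(start=earlier_timestamp, end=later_timestamp)
    posts = []
    for submission in submissions:
        title = submission.title
        text = submission.selftext  # body text; empty for link posts
        score = submission.score
        posts.append((title, text, score))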

Together with the rest of the Python script, the snippet above downloads five years of submissions from a specified subreddit. Since some subreddits have a large volume of data, it is better to download submissions a few days at a time so they can be saved to the database in smaller batches. The script starts five years in the past and steps forward in time until it reaches the latest submissions. I used SQLite as the database because it is fast and lightweight.
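
The download loop can be sketched roughly as follows. This is a minimal sketch, not the original script: it assumes a hypothetical `fetch_window(days_ago, time_step)` callable that wraps the PRAW snippet and returns `(title, text, score)` tuples, and the table name, schema and three-day default step are illustrative assumptions. It uses Python's built-in `sqlite3` module.

```python
import sqlite3
from typing import Callable, Iterable, Tuple

Post = Tuple[str, str, int]  # (title, text, score)


def create_table(conn: sqlite3.Connection) -> None:
    # Hypothetical schema: one row per submission.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS posts ("
        "subreddit TEXT, title TEXT, text TEXT, score INTEGER)"
    )


def save_posts(conn: sqlite3.Connection, subreddit_name: str, posts: Iterable[Post]) -> None:
    conn.executemany(
        "INSERT INTO posts (subreddit, title, text, score) VALUES (?, ?, ?, ?)",
        [(subreddit_name, title, text, score) for title, text, score in posts],
    )
    conn.commit()  # commit each window so progress survives interruptions


def download_history(
    conn: sqlite3.Connection,
    subreddit_name: str,
    fetch_window: Callable[[int, int], Iterable[Post]],
    total_days: int = 5 * 365,
    time_step: int = 3,
) -> None:
    """Walk forward from total_days ago to now, one time_step window at a time."""
    create_table(conn)
    days_ago = total_days
    while days_ago > 0:
        # The window covers [now - days_ago, now - days_ago + time_step].
        save_posts(conn, subreddit_name, fetch_window(days_ago, time_step))
        days_ago -= time_step  # shrinking days_ago moves the window forward


# Usage (needs a real fetch_window built on the PRAW snippet):
# conn = sqlite3.connect("reddit_ideas.db")
# download_history(conn, "Lightbulb", fetch_window)
```

Committing after every window keeps each transaction small and means an interrupted download keeps what it has already stored.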

Extracting key-phrases from sentences

Key-phrases have to be extracted from the sentences in the Reddit submissions. As with any NLP corpus, we have to handle stop words: the most common words in a language, which are usually not key-phrases. Discarding them before the keyword extraction step is useful.
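
To show what discarding stop words means, here is a minimal pure-Python filter. The hard-coded `STOP_WORDS` set is a small illustrative assumption; NLTK ships a much fuller English list via `nltk.corpus.stopwords.words("english")` once the `stopwords` data has been downloaded.

```python
import re
from typing import List

# Small illustrative stop-word list; a real pipeline would use a fuller one.
STOP_WORDS = {
    "a", "an", "and", "are", "be", "can", "for", "i", "in", "is", "it",
    "of", "on", "or", "that", "the", "this", "to", "with", "you",
}


def remove_stop_words(text: str) -> List[str]:
    """Lower-case the text, split it into words, and drop stop words."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return [word for word in words if word not in STOP_WORDS]


print(remove_stop_words("An app that reminds you to water the plants"))
# → ['app', 'reminds', 'water', 'plants']
```

RAKE, covered later in this post, goes a step further and uses stop words as boundaries between candidate phrases instead of simply dropping them.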

tf–idf (term frequency–inverse document frequency) is the "vanilla" method of finding keywords in a given text. It is a simple statistical measure: a term scores highly when it is frequent in one document but rare across the corpus. In my experiments, tf–idf did not give very good results. I suspect this is because it needs longer documents, and the one or two sentences in a typical Reddit submission were not enough.
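
For reference, the textbook weighting is tf(t, d) × log(N / df(t)), where tf is the term's share of the words in document d, N is the number of documents and df(t) is how many documents contain t. Below is a minimal pure-Python sketch of that formula; it is not the code used in the experiment above, and libraries such as scikit-learn's `TfidfVectorizer` use smoothed variants. The example documents are made up.

```python
import math
from collections import Counter
from typing import Dict, List


def tf_idf(docs: List[List[str]]) -> List[Dict[str, float]]:
    """Return one {term: tf-idf score} dict per tokenized document."""
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears.
    df = Counter()
    for doc in docs:
        df.update(set(doc))
    scores = []
    for doc in docs:
        counts = Counter(doc)
        total = len(doc)
        scores.append(
            {term: (count / total) * math.log(n_docs / df[term]) for term, count in counts.items()}
        )
    return scores


docs = [["solar", "phone", "charger"], ["solar", "lamp"], ["phone", "case", "phone"]]
scores = tf_idf(docs)
print(max(scores[0], key=scores[0].get))
# → charger
```

In a one-sentence submission almost every word occurs once, so tf is nearly flat and the ranking collapses to idf alone, that is, to how rare a word is across the corpus. That is one plausible reason for the weak results on short Reddit posts.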

While looking around, I found the Rapid Automatic Keyword Extraction (RAKE) algorithm. It works well for extracting key-phrases from individual documents, and it is lighter on resources than methods involving machine learning. rake-nltk is a Python library that implements RAKE using NLTK's features. Here is a snippet from a script that obtains key-phrases using rake-nltk:

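    from rake_nltk import Rake


    def get_keywords(text):
        """Take a piece of text and return its key-phrases, highest-ranked first."""
        if not text:
            return None
        # By default Rake() uses NLTK's English stop words and all punctuation
        # characters as phrase delimiters. NLTK's stopwords and sentence-tokenizer
        # data must be downloaded first, e.g. nltk.download("stopwords").
        r = Rake()
        r.extract_keywords_from_text(text)
        return r.get_ranked_phrases()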
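
To make the scoring concrete, here is a simplified from-scratch sketch of the RAKE idea. It is not rake-nltk's code, and the tiny `STOP_WORDS` set is an assumption. Text is split into candidate phrases at punctuation and stop words; each word gets the score degree / frequency, where degree counts the words it co-occurs with in candidate phrases (itself included); a phrase's score is the sum of its word scores.

```python
import re
from collections import defaultdict
from typing import List

# Tiny illustrative stop-word list; rake-nltk uses NLTK's full English list.
STOP_WORDS = {
    "a", "an", "and", "are", "be", "can", "for", "i", "in", "is", "it",
    "of", "on", "or", "that", "the", "this", "to", "with", "you",
}


def candidate_phrases(text: str) -> List[List[str]]:
    """Split text at punctuation, then at stop words, into word lists."""
    phrases = []
    for fragment in re.split(r"[^\w\s']+", text.lower()):
        current: List[str] = []
        for word in fragment.split():
            if word in STOP_WORDS:
                if current:
                    phrases.append(current)
                current = []
            else:
                current.append(word)
        if current:
            phrases.append(current)
    return phrases


def rake(text: str) -> List[str]:
    """Return candidate phrases ranked by RAKE score, highest first."""
    phrases = candidate_phrases(text)
    freq = defaultdict(int)
    degree = defaultdict(int)
    for phrase in phrases:
        for word in phrase:
            freq[word] += 1
            degree[word] += len(phrase)  # co-occurring words, including itself
    word_score = {word: degree[word] / freq[word] for word in freq}
    scored = {" ".join(p): sum(word_score[w] for w in p) for p in phrases}
    return sorted(scored, key=scored.get, reverse=True)


print(rake("Solar powered phone charger for the beach. A beach umbrella with a phone charger."))
# → ['solar powered phone charger', 'phone charger', 'beach umbrella', 'beach']
```

Long runs of content words score highly because each word's degree grows with phrase length, so RAKE tends to favour multi-word key-phrases, which suits idea seeds.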

The results seem interesting:

Next, these ideation prompts have to be used in an interface for brainstorming. Let's cover that in the next blog post.

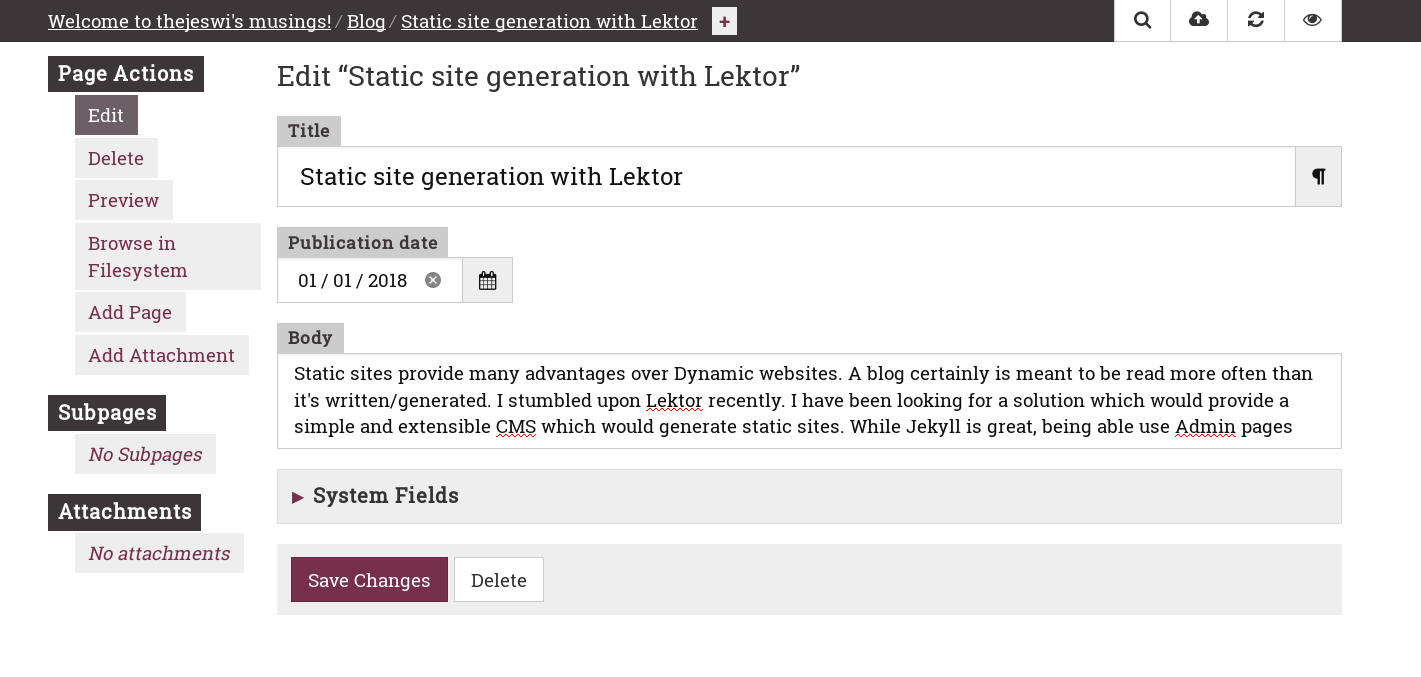

Static site generation with Lektor

Static sites provide many advantages over dynamic websites. For instance, a blog is meant to be read more often than it is written, yet a CMS such as WordPress performs a lot of processing on every page request. I had been looking for a simple, extensible CMS that would also generate static pages. Jekyll is great, but having a CMS is more convenient.

I recently stumbled upon Lektor, and it works great for this purpose. The admin page can be used to add or modify pages, and publishing takes a single click: Lektor generates the static files and deploys them using Travis CI.

I hope to use this site to write regularly about things I find interesting. See you around!